Table of Contents

- Signal DRM: When “Don’t Screenshot This” Becomes a Privacy Feature

- Modern Phone Phreaking: VoLTE, SIP, and the Return of Metadata Mischief

- The Impossible SSH RCE: When “Not Believed Exploitable” Isn’t the End of the Story

- Quick Hits: Supply-Chain Sabotage and “Impossible” Networking Tricks

- What to Do With All of This: Practical Takeaways

- Conclusion: Security Is Mostly About What You Don’t Collect (and What You Don’t Leak)

- Bonus: Real-World “This Week in Security” Experiences (Field Notes)

Security news has a funny way of rhyming. One week it’s a “new” feature that quietly takes screenshots of your life. The next it’s a “new” kind of phone phreaking that isn’t about whistles anymore; it’s about metadata.

And then there’s the classic hit single that never leaves the charts: “This bug is probably not exploitable.”

(Spoiler: that sentence has never aged well.)

This week’s theme is simple: what your systems remember, what your networks reveal, and what your mitigations actually buy you. We’re talking about Signal using a DRM-style screen-capture block to protect chats from Microsoft Recall, a VoLTE/SIP metadata leak that feels like phreaking got a software update, and a years-old OpenSSH double-free that keeps resurfacing in the “hard-to-exploit doesn’t mean safe” category.

Signal DRM: When “Don’t Screenshot This” Becomes a Privacy Feature

“DRM” usually shows up when someone is trying to stop you from doing something harmless, like taking a screenshot of a movie you paid for with your own money on a device you also paid for with your own money. So it’s deliciously ironic that one of the more practical privacy moves we’ve seen recently is Signal leaning on a DRM-like mechanism to stop Windows from capturing Signal chats.

What changed: Microsoft Recall made “what’s on your screen” a database

Microsoft Recall is designed to periodically capture snapshots of what’s on a user’s screen and make them searchable, a kind of “photographic memory” for your desktop. That may sound convenient if your biggest secret is the tenth tab of “best air fryer recipes,” but it becomes a different conversation when the screen contains message threads, one-time codes, internal dashboards, legal documents, medical info, or anything else that shouldn’t live forever in a searchable archive.

Microsoft has described Recall as opt-in, with controls for saving snapshots, filtering apps and websites, and enterprise policies for retention and DLP integration. That’s important progress. But privacy-preserving apps still face an uncomfortable reality: if the operating system can capture your window contents, it can store them, unless you have a way to opt out at the OS level.

The “DRM flag” workaround: exclude the window from capture

Signal’s response on Windows 11 is a “Screen security” setting that blocks screenshots of the Signal Desktop window. The key trick is that Windows provides APIs that allow an app to mark its window as excluded from capture. If the window is excluded, the content doesn’t show up in Recall (or other screenshot tools). In other words: Signal doesn’t need to “beat” Recall; it needs to convince Windows that its window is not eligible for screen capture in the first place.
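Here is roughly what that opt-out looks like at the Win32 level. This is a minimal Python/ctypes sketch, not Signal’s code (Signal Desktop is an Electron app). The helper names `exclude_window_from_capture` and `current_affinity` are hypothetical; `SetWindowDisplayAffinity`, `GetWindowDisplayAffinity`, and the `WDA_*` constants are the real Win32 names. On anything other than Windows the helpers just return `False` or `None`.

```python
import ctypes
import sys

# Display-affinity values from the Win32 headers (winuser.h).
WDA_NONE = 0x00000000
WDA_MONITOR = 0x00000001
WDA_EXCLUDEFROMCAPTURE = 0x00000011  # Windows 10 version 2004 and later


def exclude_window_from_capture(hwnd: int) -> bool:
    """Ask Windows to omit a window from screen captures (hypothetical helper).

    Returns True if SetWindowDisplayAffinity reported success, and False on
    failure or when not running on Windows.
    """
    if sys.platform != "win32":
        return False
    user32 = ctypes.windll.user32
    user32.SetWindowDisplayAffinity.argtypes = [ctypes.c_void_p, ctypes.c_uint32]
    user32.SetWindowDisplayAffinity.restype = ctypes.c_int  # Win32 BOOL
    return bool(user32.SetWindowDisplayAffinity(hwnd, WDA_EXCLUDEFROMCAPTURE))


def current_affinity(hwnd: int):
    """Return the window's current affinity value, or None if unavailable."""
    if sys.platform != "win32":
        return None
    user32 = ctypes.windll.user32
    user32.GetWindowDisplayAffinity.argtypes = [
        ctypes.c_void_p,
        ctypes.POINTER(ctypes.c_uint32),
    ]
    user32.GetWindowDisplayAffinity.restype = ctypes.c_int
    value = ctypes.c_uint32(0)
    if not user32.GetWindowDisplayAffinity(hwnd, ctypes.byref(value)):
        return None
    return value.value
```

A real app would pass the handle of its own top-level window. The point is how small the opt-out is: one flag on the window, and the OS does the rest.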

The hilarious part is the naming and lineage. This approach is commonly associated with protecting DRM content (think streaming video). But in this context, it becomes privacy DRM: not protecting Hollywood, but protecting your chats.

It’s the rare moment where DRM shows up as the hero in the third act instead of the villain in the first five minutes.

Trade-offs: security by default vs. accessibility and usability

Signal didn’t pretend this is consequence-free. Blocking capture can interfere with legitimate needs: accessibility workflows, support troubleshooting, documenting harassment, reporting abuse, or simply saving something you’re allowed to save. So Signal made the setting easy to toggle off, but with warnings and friction to prevent accidental “sure, record my private chats” moments.

The deeper lesson isn’t “DRM good now.” It’s that operating systems are increasingly adding agentic or AI-enabled features that touch everything on-screen, and app developers need granular, trustworthy controls to prevent sensitive content from being ingested. If “mark your window as DRM” is the cleanest opt-out, that’s… not exactly a confidence-inspiring developer experience.

Modern Phone Phreaking: VoLTE, SIP, and the Return of Metadata Mischief

Classic phone phreaking has its mythology: the 2600 Hz tone, the blue box, the era when telecom control signals were easier to poke because the network was more trusting (and sometimes comically under-documented). Modern telecom isn’t that. It’s layered, packetized, encrypted in places, and wrapped in standards. That’s why “modern phone phreaking” often looks less like playing a tone and more like discovering that something in the signaling path is leaking information it never should.

VoLTE is VoIP with cellular characteristics, and SIP carries baggage

Voice over LTE (VoLTE) uses the cellular data network to carry voice calls, and it relies on IP-based signaling in the IMS (IP Multimedia Subsystem) ecosystem. SIP (Session Initiation Protocol) is a major piece of how calls get set up and routed. SIP is also used outside mobile networks (desk phones, call centers, conferencing systems), so it comes with a long history and a lot of optional fields, headers, and “helpful” metadata.
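To make “a lot of optional fields” concrete, here is a made-up, heavily trimmed SIP INVITE of the kind an IMS core might handle, plus a tiny parser. Every value is a placeholder, `RAW_INVITE` and `parse_sip_headers` are hypothetical names, and this is not taken from the reported leak. Two fields are worth noticing because they are real IMS conventions: the `P-Access-Network-Info` header (defined for 3GPP networks, able to carry a cell identifier) and the `+sip.instance` parameter in `Contact`, which can hold a device IMEI as a `urn:gsma:imei` URN.

```python
# A trimmed, made-up SIP INVITE; every value is a placeholder.
RAW_INVITE = (
    "INVITE sip:+15550100@ims.example.net SIP/2.0\r\n"
    "Via: SIP/2.0/TCP [2001:db8::1]:5060;branch=z9hG4bK776asdhds\r\n"
    "From: <sip:+15550199@ims.example.net>;tag=1928301774\r\n"
    "To: <sip:+15550100@ims.example.net>\r\n"
    "Call-ID: a84b4c76e66710\r\n"
    "CSeq: 1 INVITE\r\n"
    'Contact: <sip:+15550199@[2001:db8::1]:5060>;'
    '+sip.instance="<urn:gsma:imei:49015420-323751-8>"\r\n'
    "P-Access-Network-Info: 3GPP-E-UTRAN-FDD;utran-cell-id-3gpp=0010100A10B0C0D1\r\n"
    "Content-Length: 0\r\n"
    "\r\n"
)


def parse_sip_headers(raw: str) -> tuple[str, dict[str, list[str]]]:
    """Split a SIP message into (start line, {lowercased header name: [values]})."""
    head, _, _body = raw.partition("\r\n\r\n")  # headers end at the first blank line
    start_line, *header_lines = head.split("\r\n")
    headers: dict[str, list[str]] = {}
    for line in header_lines:
        name, _, value = line.partition(":")  # split on the first colon only
        headers.setdefault(name.strip().lower(), []).append(value.strip())
    return start_line, headers


start, headers = parse_sip_headers(RAW_INVITE)
print(start)
for name, values in headers.items():
    print(f"{name}: {values}")
```

The parser ignores SIP’s compact header forms and line folding, so treat it as a reading aid rather than a SIP stack.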

Here’s where the story turns: a researcher examining VoLTE call signaling on their own carrier’s network found SIP headers carrying more identifying and location-related data than you’d want floating around. Reported examples included identifiers like IMSI/IMEI and cell-related information that can be used to infer location (directly or indirectly, depending on what’s exposed and who can see it).

Even if you never share your location in an app, the network might still accidentally tell on you.
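Spotting that kind of leak in a capture is mostly pattern matching. The sketch below is a heuristic with hypothetical function names. It flags 15-digit runs (the usual length of both IMSIs and IMEIs), uses the Luhn check digit that a valid IMEI carries to guess which kind it might be, and pulls out the 3GPP `utran-cell-id-3gpp` cell-identifier parameter. Expect false positives, such as long phone numbers.

```python
import re

FIFTEEN_DIGITS = re.compile(r"(?<!\d)\d{15}(?!\d)")
CELL_ID_PARAM = re.compile(r"utran-cell-id-3gpp=([0-9A-Fa-f]+)")


def luhn_ok(digits: str) -> bool:
    """Luhn checksum; the 15th digit of a valid IMEI is a Luhn check digit."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_identifiers(header_value: str) -> list[tuple[str, str]]:
    """Heuristically flag IMEI/IMSI-like numbers and 3GPP cell IDs in a header."""
    hits = []
    # IMEI URNs group digits with dashes (8-6-1), so drop dashes before matching.
    for m in FIFTEEN_DIGITS.finditer(header_value.replace("-", "")):
        # A Luhn pass is only a hint: roughly 1 in 10 random numbers also pass.
        kind = "possible-imei" if luhn_ok(m.group()) else "possible-imsi"
        hits.append((kind, m.group()))
    for m in CELL_ID_PARAM.finditer(header_value):
        hits.append(("cell-id", m.group(1)))
    return hits
```

Run it over every header value in a captured message; anything it flags in traffic that leaves the carrier’s core is worth a closer look.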

Why this feels like phreaking: it’s about abusing the “plumbing,” not the app

When people hear “phone hacking,” they often imagine malware on the device. Telecom incidents often live elsewhere: the signaling plane, the carrier infrastructure, interconnect systems, debug logging, misconfigured gateways, or vendor defaults that were never meant to be exposed beyond a trusted environment.

That’s why the “phreaking” label still fits: it’s about the system behind the handset. You can have a perfectly patched phone, avoid sketchy apps, and still be impacted if the network’s call setup messages expose data to parties that shouldn’t have it.
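On the network side, the usual remedy is to strip such headers at the edge of the trusted network before a message goes anywhere else. Here is a minimal sketch of that policy, assuming a simple header deny-list. The list and the function name are hypothetical, and real session border controllers apply far richer, per-peer rules.

```python
# Hypothetical deny-list: headers an edge proxy drops before a message
# leaves the trusted core. Real operators tune this per peer.
STRIP_AT_BOUNDARY = frozenset({"p-access-network-info"})


def strip_sensitive_headers(raw: str, deny: frozenset = STRIP_AT_BOUNDARY) -> str:
    """Return the SIP message with deny-listed headers removed (body untouched)."""
    head, sep, body = raw.partition("\r\n\r\n")
    start_line, *lines = head.split("\r\n")
    kept = [
        line for line in lines
        if line.partition(":")[0].strip().lower() not in deny
    ]
    return "\r\n".join([start_line, *kept]) + sep + body
```

Removing an identifier carried as a parameter inside a header the call still needs, such as `+sip.instance` in `Contact`, takes a more surgical rewrite than this.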

So what’s the risk, practically?

- Targeted surveillance: If an attacker can obtain signaling metadata or correlate it with other data sources, they may be able to track or narrow down where someone is, especially if cell identifiers can be mapped.

- Identity correlation: IMSI/IMEI-style identifiers can enable persistent tracking across time, even when apps change, accounts rotate, or numbers are masked.

- Operational security failures: Journalists, activists, executives, and anyone relying on privacy tools can get blindsided by infrastructure leakage that sits outside end-to-end encrypted apps.

The good news: responsible disclosure and carrier/vendor remediation can address these issues, and reports indicate fixes were deployed.

The less-good news: it’s a reminder that privacy isn’t only an app problem. It’s a systems problem.

The Impossible SSH RCE: When “Not Believed Exploitable” Isn’t the End of the Story

SSH is one of the most attacked services on the internet and one of the most defended, because everyone depends on it and everyone has tried to break it. That makes OpenSSH vulnerability narratives especially interesting. When a flaw is discovered, the first question is “how bad is it?” and the second question is “can it realistically be exploited?”

Those two questions are related, but they are not the same. And this week’s “impossible SSH RCE” storyline is a great illustration of why security engineers should be cautious about the phrase “probably not exploitable.”

CVE-2023-25136: a pre-auth double-free in OpenSSH 9.1

OpenSSH 9.1 introduced a pre-authentication double-free bug (CVE-2023-25136) that was fixed in OpenSSH 9.2. The word “pre-auth” is what makes people sit up straight: it means the bug can be reached before a user logs in, which widens the attack surface.

A double-free is a memory-safety issue where a piece of memory is freed twice. That can corrupt the allocator’s bookkeeping, potentially allowing an attacker to manipulate program behavior if they can control allocation patterns and corruption outcomes.
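Why does freeing twice matter? A toy model helps. Below is a deliberately naive allocator in Python with a last-in, first-out free list and no double-free check. It is not OpenSSH’s code or any real malloc, and the class and function names are hypothetical. It shows the core primitive: after a double free, two later allocations can receive the same chunk, so writes through one “object” silently land in the other.

```python
class ToyAllocator:
    """A deliberately naive allocator: LIFO free list, no double-free check."""

    def __init__(self, n_chunks: int) -> None:
        # Chunks are just integer IDs; reversing makes chunk 0 come out first.
        self.free_list = list(range(n_chunks))[::-1]

    def malloc(self) -> int:
        return self.free_list.pop()

    def free(self, chunk: int) -> None:
        # The bug class in miniature: nothing checks whether `chunk` is already free.
        self.free_list.append(chunk)


def double_free_demo() -> tuple[int, int, int]:
    heap = ToyAllocator(4)
    a = heap.malloc()
    heap.free(a)
    heap.free(a)              # double free: chunk `a` is now on the free list twice
    victim = heap.malloc()    # imagine an object that holds a function pointer
    attacker = heap.malloc()  # imagine a buffer the attacker fills with chosen bytes
    return a, victim, attacker


print(double_free_demo())  # prints (0, 0, 0): both allocations share chunk 0
```

In a real exploit, that overlap is what lets attacker-controlled bytes land on top of something sensitive. Hardened allocators try to detect the double free itself, and ASLR and sandboxing try to make the overlap useless or contained.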

Official release notes characterized the issue as “not believed to be exploitable,” noting that it occurs in the unprivileged pre-auth process, which is chrooted and further sandboxed on most major platforms. That context matters. Modern OpenSSH deployments also benefit from a long list of mitigations, some from the OS and compiler (ASLR, NX, stack canaries, hardened allocators) and some from OpenSSH’s own design (privilege separation, chrooting, sandboxing). The entire point of defense-in-depth is to turn memory bugs into crashes instead of shells.

But “hard” isn’t “impossible,” and “theoretical” is still a risk category

Multiple third-party analyses have pointed out that the double-free could be leveraged to redirect control flow under certain conditions, and researchers have explored proofs of concept demonstrating impact ranging from denial of service to limited exploitation scenarios when security mitigations are absent or reduced. That doesn’t mean every OpenSSH server on the internet is one packet away from doom. It means exploitability is a moving target:

- Mitigations differ by OS and build: what’s “not believed exploitable” in one environment may be less protected in another.

- Attack primitives evolve: new heap exploitation techniques can turn yesterday’s dead-end into tomorrow’s exploit chain.

- Operational reality is messy: custom builds, legacy platforms, unusual configs, and partial patching are everywhere.
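As one small, concrete example of “mitigations differ,” Linux exposes its ASLR mode as a sysctl. This sketch (the helper name is hypothetical) reads it. It covers only one of the mitigations named earlier in this section and says nothing about how your `sshd` was compiled or whether its sandbox is active.

```python
from pathlib import Path
from typing import Optional, Tuple

# Meanings per the Linux kernel's sysctl documentation for randomize_va_space.
ASLR_LEVELS = {
    0: "off",
    1: "stack, mmap base, and VDSO randomized",
    2: "level 1 plus heap (brk) randomization",
}


def linux_aslr_level(
    path: str = "/proc/sys/kernel/randomize_va_space",
) -> Optional[Tuple[int, str]]:
    """Return (level, meaning), or None if the setting can't be read."""
    try:
        level = int(Path(path).read_text().strip())
    except (OSError, ValueError):
        return None  # not Linux, no /proc, or unexpected contents
    return level, ASLR_LEVELS.get(level, "unknown")


print(linux_aslr_level())
```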

The result is a practical security lesson: exploitability assessments are valuable, but they’re not warranties. If you’re responsible for an exposed SSH service, patching is still the right move, even when the write-ups are filled with “unlikely,” “difficult,” or “theoretically possible.”
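A quick first pass is to look at the version a server advertises in its identification banner, the first line it sends on connect (for example, what `nc host 22` prints). The helper below is a hypothetical sketch that flags banners claiming upstream 9.1, the release with CVE-2023-25136. Treat it as triage only: distributions often backport fixes without changing the version string, and a banner is only what the server chooses to report.

```python
import re
from typing import Optional

# Identification string format per RFC 4253: "SSH-2.0-<softwareversion> <comments>".
OPENSSH_BANNER = re.compile(r"^SSH-2\.0-OpenSSH_(\d+)\.(\d+)(?:p\d+)?")


def advertises_openssh_9_1(banner: str) -> Optional[bool]:
    """True if the banner claims OpenSSH 9.1, False for other OpenSSH
    versions, None if it isn't a recognizable OpenSSH banner."""
    m = OPENSSH_BANNER.match(banner.strip())
    if m is None:
        return None
    return (int(m.group(1)), int(m.group(2))) == (9, 1)


print(advertises_openssh_9_1("SSH-2.0-OpenSSH_9.1p1 Debian-2"))  # prints True
```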

Where AI fits in (and where it doesn’t)

There’s also a modern subplot: people keep asking whether AI will make exploitation trivial. The reality, at least today, is more boring and more useful. AI is increasingly helpful for reading code, summarizing behavior, assisting reverse engineering, and catching mistakes. That matters for defenders and researchers. But turning a heavily mitigated memory bug into a reliable remote code execution exploit is still deeply technical, environment-dependent, and unforgiving of hand-wavy reasoning.

In other words: AI can make you faster at the parts of security work that look like “understanding,” but it hasn’t replaced the parts that look like “engineering.” The “impossible SSH RCE” discussion is a good example of why.

Quick Hits: Supply-Chain Sabotage and “Impossible” Networking Tricks

Because no security week is complete without at least one story that makes developers stare at their dependency tree like it’s a haunted family portrait.

Silent npm sabotage: the slow-burn supply-chain problem

Research teams have flagged npm packages that stayed available for long periods, accumulated downloads, and contained destructive behavior designed to corrupt data, delete files, or crash systems. What makes this category nasty isn’t just that it exists; it’s that it can be subtle. If a malicious package is built to trigger later or cause “intermittent” failures, it blends into the daily chaos of software engineering. The team assumes it’s a flaky test, a race condition, or a bad deploy… right up until it isn’t.
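Install scripts are only one place such code can hide (delayed-trigger sabotage may well sit in ordinary runtime code), but they are an easy first inventory. This hypothetical helper lists lockfile entries that npm marked with `hasInstallScript`, a field that `package-lock.json` records for packages with `preinstall`, `install`, or `postinstall` scripts in lockfile versions 2 and 3. Older v1 lockfiles lack the field, so this sketch assumes v2 or later.

```python
import json


def packages_with_install_scripts(lockfile_text: str) -> list[str]:
    """List lockfile entries (by install path) that npm flagged as having install scripts."""
    lock = json.loads(lockfile_text)
    flagged = []
    for path, meta in lock.get("packages", {}).items():
        # The key "" is the root project itself; we only want dependencies.
        if path and meta.get("hasInstallScript"):
            flagged.append(path)
    return sorted(flagged)


if __name__ == "__main__":
    try:
        with open("package-lock.json", encoding="utf-8") as f:
            print(packages_with_install_scripts(f.read()))
    except FileNotFoundError:
        print("no package-lock.json in the current directory")
```

A short, stable list is the goal; a new entry appearing after a routine update is a good reason to read that package’s scripts.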

“Impossible” TCP sessions: when the network lies convincingly

A separate research thread explored tooling that automates parts of spoofing and session establishment using classic local-network techniques (think ARP-based deception). The key takeaway isn’t “go do this.” It’s that networks still contain trust assumptions, and attackers love automating the boring steps. If your environment assumes “source IP = identity,” it’s time for an uncomfortable meeting with reality.
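On the defensive side, one cheap signal for ARP-based deception is a single MAC address answering for several IP addresses. The sketch below (the function name is hypothetical) parses the text layout of Linux’s `/proc/net/arp`. Legitimate setups such as proxy ARP trigger it too, so treat hits as prompts to look closer, not verdicts.

```python
from collections import defaultdict


def macs_claiming_many_ips(arp_table: str) -> dict[str, list[str]]:
    """Map each MAC to its IPs, keeping only MACs that answer for 2+ IPs.

    Expects the /proc/net/arp layout: a header row, then
    IP address, HW type, Flags, HW address, Mask, Device.
    """
    ips_by_mac: dict[str, set[str]] = defaultdict(set)
    for line in arp_table.splitlines()[1:]:  # skip the header row
        cols = line.split()
        if len(cols) < 6:
            continue
        ip, mac = cols[0], cols[3].lower()
        if mac == "00:00:00:00:00:00":  # incomplete entry, no reply seen yet
            continue
        ips_by_mac[mac].add(ip)
    return {mac: sorted(ips) for mac, ips in ips_by_mac.items() if len(ips) > 1}


if __name__ == "__main__":
    try:
        with open("/proc/net/arp") as f:
            print(macs_claiming_many_ips(f.read()))
    except OSError:
        print("no /proc/net/arp here (not Linux?)")
```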

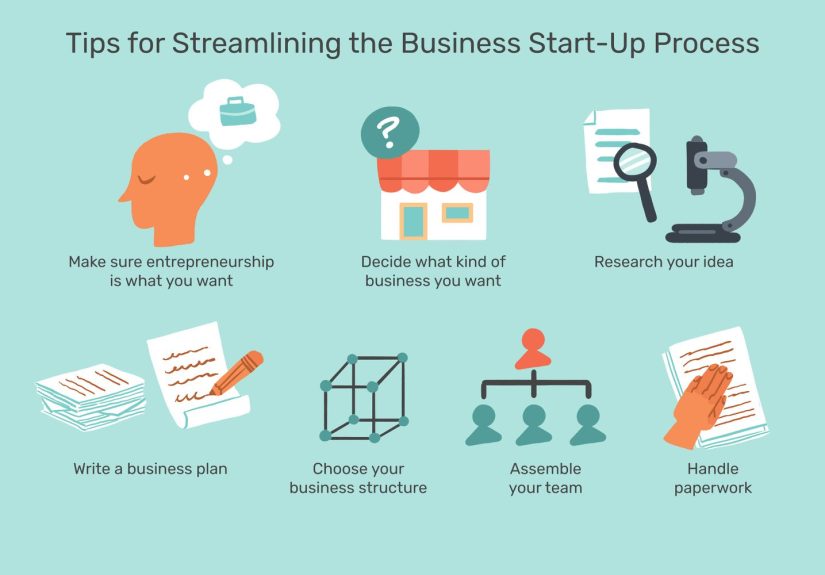

What to Do With All of This: Practical Takeaways

- Treat on-screen data as exportable by default. If your threat model assumes “it’s fine because it’s only on the screen,” Recall-style features challenge that assumption. Prefer controls that let apps and users exclude sensitive windows from capture.

- Use OS-level protections where available. Window capture exclusions and DLP integrations are imperfect, but they are still better than pretending the OS is always a neutral bystander.

- Remember that privacy depends on infrastructure. End-to-end encryption protects message content, but metadata leaks in telecom signaling can still create serious risk. Pressure for better carrier hygiene and standards compliance matters.

- Patch OpenSSH even when the exploit sounds “impossible.” “Not believed exploitable” is not the same as “safe to ignore.” Defense-in-depth reduces risk; it doesn’t eliminate it.

- Harden your supply chain like you mean it. Dependency pinning, lockfile hygiene, provenance signals, review of install scripts, and monitoring for anomalous behavior won’t solve everything, but they reduce your blast radius.
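For the dependency-pinning item, here is a small, hypothetical policy check that lists `package.json` dependencies declared as ranges (`^`, `~`, `*`, tags, URLs) rather than exact versions. It is a sketch, not an npm feature. npm’s `save-exact` setting is the built-in way to record exact versions on install, and the lockfile is what actually pins resolved versions, including transitive ones.

```python
import json
import re

# Exact versions like "1.2.3" or "1.2.3-beta.1"; anything else counts as a range.
EXACT = re.compile(r"^\d+\.\d+\.\d+(?:[-+][0-9A-Za-z.+-]+)?$")


def unpinned_dependencies(package_json_text: str) -> dict[str, str]:
    """Return dependency specs in package.json that are not exact versions."""
    manifest = json.loads(package_json_text)
    loose = {}
    for section in ("dependencies", "devDependencies", "optionalDependencies"):
        for name, spec in manifest.get(section, {}).items():
            if not EXACT.match(spec):
                loose[name] = spec
    return loose
```

Local paths and workspace references also show up as “loose” here, which may or may not match your policy.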

Conclusion: Security Is Mostly About What You Don’t Collect (and What You Don’t Leak)

This week’s stories look different on the surface (DRM flags, SIP headers, and double-frees), but they’re all about the same thing: systems quietly collecting or exposing more than they should. Signal’s DRM workaround is a reminder that privacy sometimes means using the platform’s own rules against its overreach. VoLTE metadata leakage shows how “the plumbing” can undo app-level privacy. And OpenSSH’s “impossible” exploitation debate reinforces a classic truth: mitigations are great, but patching is still non-negotiable.

The best security outcome is boring: fewer snapshots, less metadata, and patches applied before anyone has to learn a new acronym the hard way.

Bonus: Real-World “This Week in Security” Experiences (Field Notes)

If you’ve ever worked in or around security, you know the headlines are only half the story. The other half is the lived experience: the Slack threads, the ticket queues, the late-night “is this real?” pings, and the slow realization that the problem isn’t just one vulnerability; it’s how humans and systems behave under pressure.

For example, features like Recall tend to trigger two reactions at the same time: excitement and dread. Product-minded folks see a productivity dream: search your past work, recover context, find that one snippet you swear you saw yesterday. Security-minded folks see a new data lake that happens to be made of screenshots. In practice, organizations often end up doing a fast internal audit: “What’s on employee screens all day?” The answer is usually everything: customer data, internal tools, credentials in password managers, incident dashboards, legal docs, and private chats. Even if a feature is opt-in, the mere existence of a searchable screenshot archive changes how teams think about endpoint risk.

The Signal angle also feels familiar in a very specific way: privacy teams routinely discover they’re being forced to bolt on safeguards because the platform didn’t provide the right controls up front. It’s the same story in different outfits: browsers add tracking protections because the web didn’t, messaging apps add screenshot warnings because users needed them, and now desktop apps add capture exclusions because the OS is capturing too much. In day-to-day operations, this becomes an “edge case tax”: support has to explain why screenshots are blank, accessibility teams have to test whether assistive tools still work, and users have to learn one more setting that matters.

On the telecom side, modern “phreaking” stories usually land with a thud because they remind people how little control they have. When a carrier-side leak happens, most end users can’t patch it. They can’t toggle a privacy option that changes signaling behavior. They can’t “use a different app” to fix it. The experience for many people is learning that privacy is not only about what you do on your phone, but what the network does about your phone. For risk-heavy roles (journalism, activism, executive security), the usual response is to layer controls: device hygiene, careful operational practices, and sometimes even changes in how and when phones are used. It’s not glamorous; it’s pragmatic.

And then there’s the OpenSSH pattern, which veteran engineers recognize instantly: the vulnerability drops, the first wave of chatter says “this is catastrophic,” the second wave says “actually it’s hard,” and the third wave is a quiet, disciplined patch rollout, because the people who run real systems don’t bet their uptime on optimism. The most common experience isn’t panic; it’s prioritization. Teams check which versions they run, where they expose SSH, what compensating controls exist (network restrictions, MFA, jump hosts), and how fast they can patch without breaking production. The phrase “not believed exploitable” might lower the temperature, but it rarely ends the conversation.

Finally, supply-chain incidents create a special kind of fatigue. Developers want to ship. Security teams want to reduce unknown code execution. Leadership wants both, plus a timeline and a confidence score. In reality, the win often comes from small habits repeated consistently: fewer dependencies, tighter review of install scripts, basic monitoring, and a healthy skepticism of “tiny helper libraries” that ask for a lot of trust. The emotional experience is usually less “Hollywood hacking” and more “why is my build pipeline talking to a weird endpoint?”

That’s the modern security vibe: not dramatic, but deeply consequential.

If this week had a single lesson from the trenches, it’s this: security isn’t only about stopping attackers. It’s about shaping systems so they don’t casually create new piles of sensitive data, new metadata trails, or new “probably impossible” exploit opportunities.